Kevin Angers

I’m a first-year Master’s student at the New York University Tandon School of Engineering studying Electrical Engineering.

Previously, I graduated from the Engineering Science program at the University of Toronto, where I majored in Robotics Engineering and minored in Machine Intelligence. While at UofT, I had the opportunity to work with Prof. Florian Shkurti (affiliations: UofT Robotics Institute, Vector Institute, Acceleration Consortium) at the Robot Vision and Learning (RVL) Lab on generalizable end-to-end robot manipulation.

In addition, I did a 16-month research internship at the Matter Lab supervised by Prof. Alán Aspuru-Guzik (affiliations: Vector Institute, Acceleration Consortium, CIFAR, NVIDIA), Prof. Milica Radisic (affiliations: UofT BME, UHN, Acceleration Consortium), and Prof. Florian Shkurti, where I developed RoboCulture, a general-purpose robotics approach for laboratory automation.

For three years, I was also a member of aUToronto, the University of Toronto’s autonomous driving design team, where I worked primarily on 3D object detection and localization.

I also captained the University of Toronto Varsity Blues Baseball Team, winning three Ontario University Athletics (OUA) championships. I was a two-time winner of the OUA Most Valuable Pitcher award and proudly received the Frank Pindar Men’s Athlete of the Year award.

Education

| New York University Tandon School of Engineering |

| University of Toronto Robotics Major, Machine Intelligence Minor |

Publications

| RoboCulture: A Robotics Platform for Automated Biological Experimentation PAPER / PROJECT PAGE / CODE / POSTER |

Experience

| aUToronto – University of Toronto Self Driving Car Team 3D Object Detection I co-led the development of a deep learning–based 3D object detection system, integrating state-of-the-art architectures (e.g., PointPillars, CenterPoint, PV-RCNN) with extensive experimentation in data augmentation and model optimization using the OpenPCDet toolkit for real-time deployment on our autonomous vehicle. I also co-led a 100+ student 3D/LiDAR labeling effort and participated in domain adaptation research between Velodyne and Cepton LiDAR systems. aUToronto secured 1st place in the 2024 and 2025 SAE AutoDrive Challenge II against nine other North American universities. |

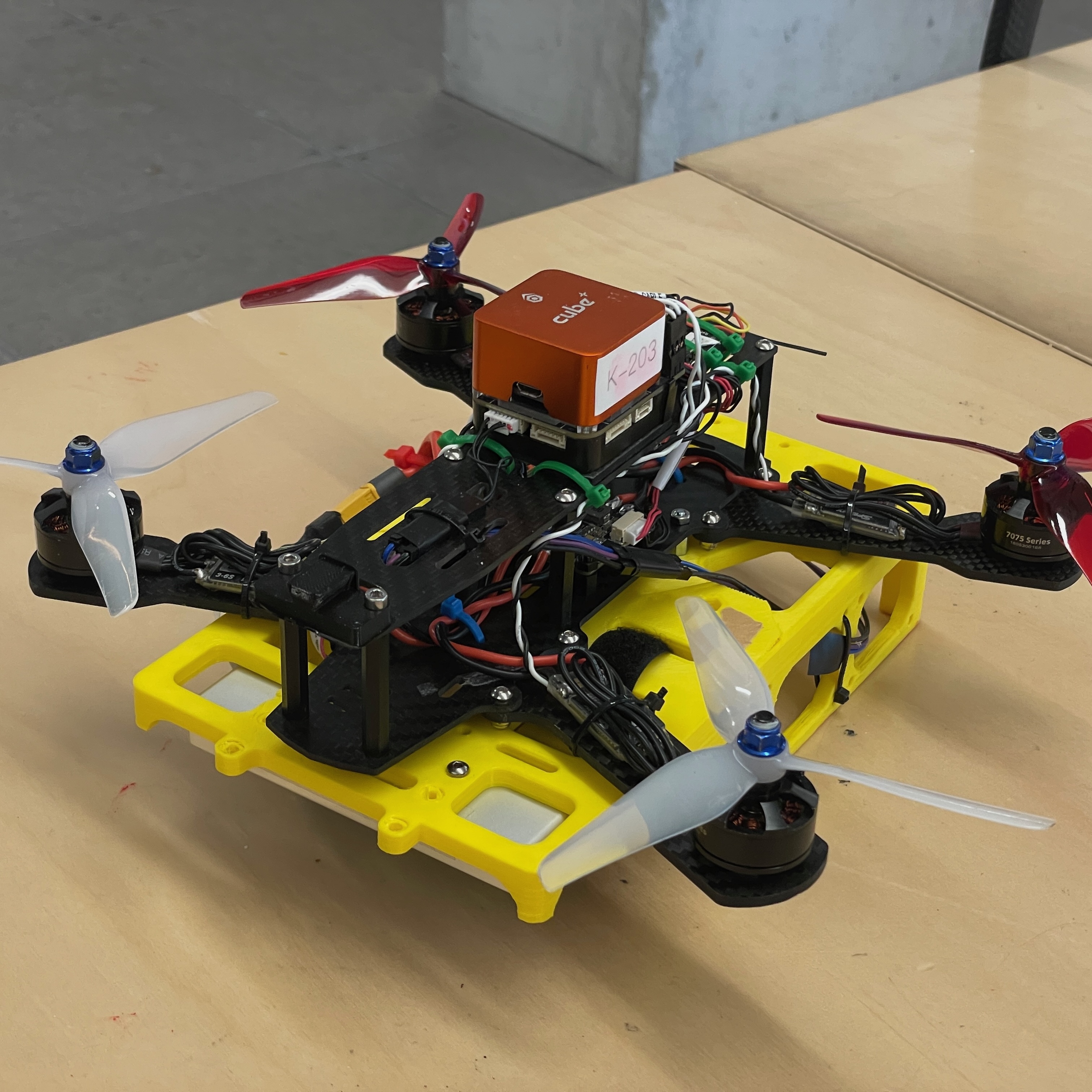

| Capstone Project – Vision-Based Autonomous Quadcopter UofT EngSci Robotics Capstone Built an autonomous indoor quadcopter system that uses real-time onboard vision (MobileNet-SSD on Jetson Nano), visual-servoing control, and a ROS 2 state machine to track and follow a fast-moving “getaway car” in a simulated police chase scenario. The project integrates perception, control, and high-level behaviour into a system that can detect, chase, and reacquire a target under tight compute constraints. |

| Undergraduate Thesis – “Extending AnyPlace Toward Unified Grasp and Placement for Generalized Robotic Manipulation” BASc Thesis at UofT Eng Sci, Supervised by Prof. Florian Shkurti I designed an end-to-end robotic pick-and-place system that unifies grasping and placement into a single diffusion-based visuomotor policy. I redesigned a traditional “grasp-then-place” pipeline into a large-scale demonstration collection system in Isaac Lab, using motion planning and simulation to automatically generate datasets of successful trajectories. These demonstrations were then used to train a RoboMimic diffusion policy that maps raw scene observations directly to feasible, task-aware action sequences, removing the need for hand-tuned pre-placement poses and brittle heuristic planning. |

Projects

| Lidar Mapping and Localization Course Lab Projects - ROB521 (Mobile Robotics and Perception). Code available upon request. I completed a sequence of ROS-based mobile robotics labs using a TurtleBot3 Waffle Pi in both simulation and real-world environments, where I implemented global path planning (RRT/RRT*), local trajectory-rollout control, and LiDAR-based occupancy grid mapping. I also experimented with particle-filter localization and SLAM (AMCL, Gmapping). |

| Motion Planning on KUKA Manipulator Course Lab Project – ECE470 (Robot Modeling and Control). Code available upon request. Implemented the artificial potential field algorithm in MATLAB, simulating attractive and repulsive forces for a KUKA manipulator so that it can grasp objects and place them at desired points while avoiding obstacles. |

| Cart Pendulum Control Course Lab Project – ECE557 (Linear Control Theory). Code available upon request. Designed an output feedback controller that lets a cart-pendulum system track a square wave signal while keeping the pendulum balanced in the vertical upright configuration. Used state-space control design principles to build an observer for the linearized system, simulated it in Simulink, and tested it on a physical system using an Arduino. |

| Bayesian Localization with Mobile Robot Course Lab Project – ROB301 (Introduction to Robotics). Code available upon request. Implemented Bayesian localization and Kalman filtering algorithms for accurate state estimation from noisy measurements, enabling robust navigation of a mobile robot across a topological map in a simulated mail delivery task. |

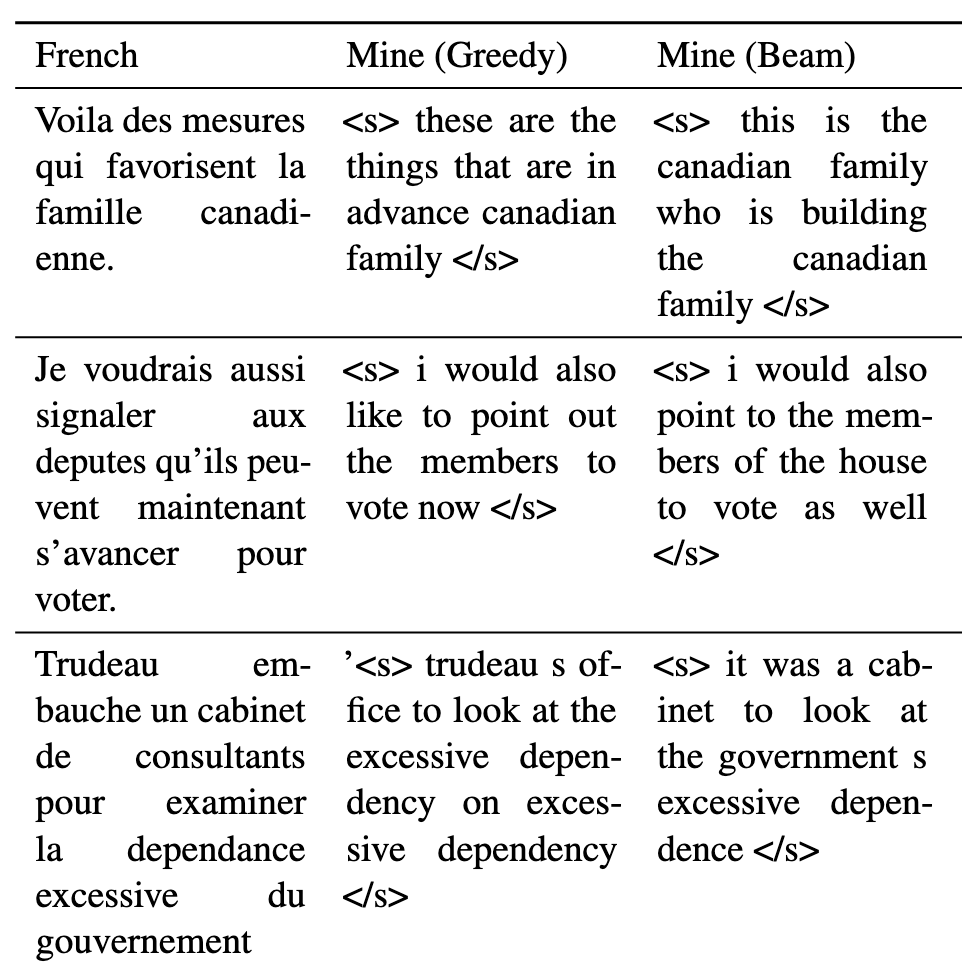

| Transformer Language Model from Scratch Course Project – CSC401 (Natural Language Computing). Code available upon request. Implemented a Transformer-based language model from scratch in PyTorch for machine translation, trained it on a corpus of English-French text, and evaluated its performance using the BLEU metric. |

| Globe Bot – A Voice Controlled Globe Course Project - MIE438 (Microcontrollers and Embedded Microprocessors) Ask the Globe Bot to point to any location, and it will spin and highlight the given location on the globe under a magnifying glass. |

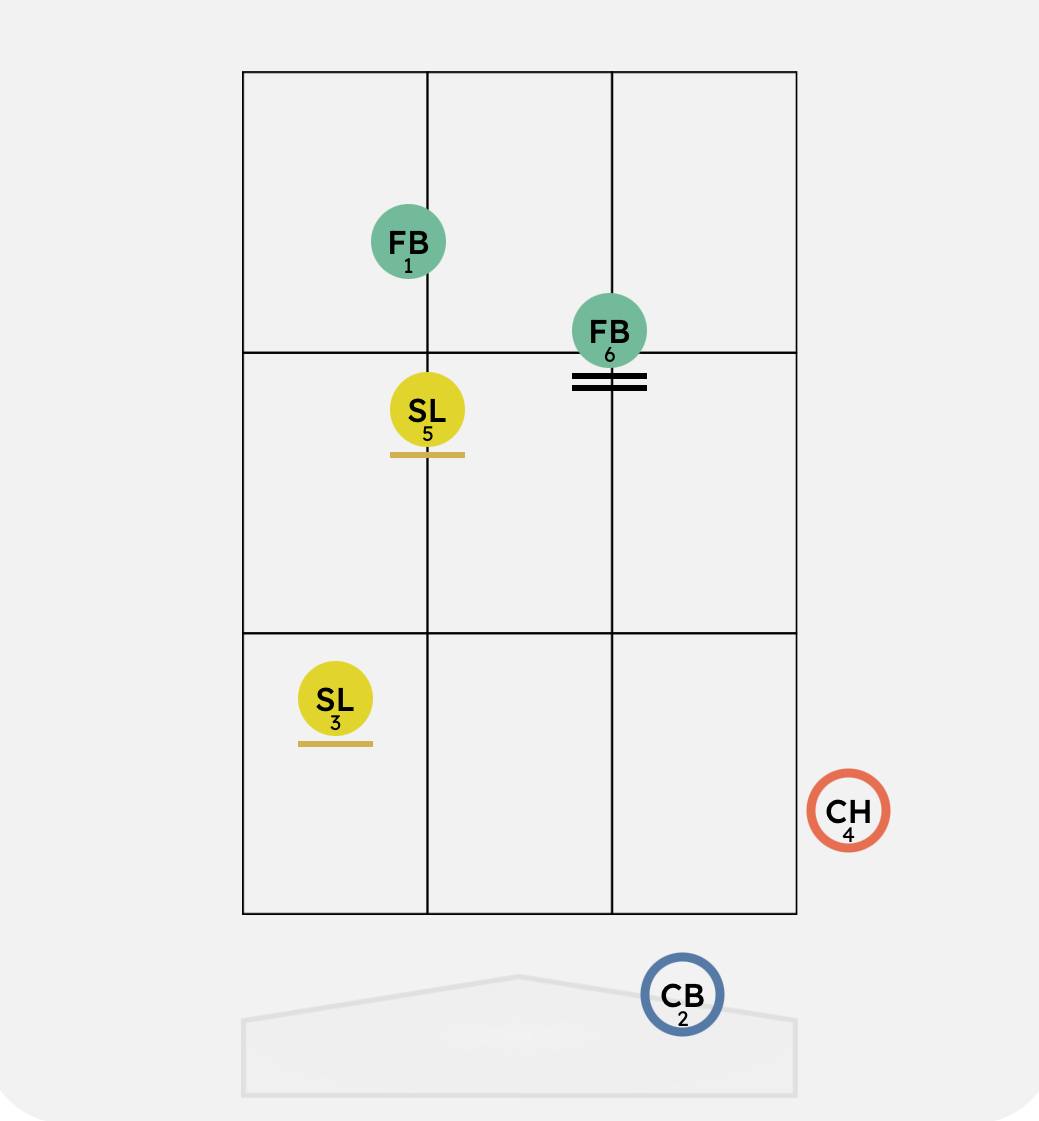

| PitChart - iOS App for Baseball Pitch Charting iOS app built with SwiftUI for tracking sequences of pitches for player trend analysis with the UofT Baseball team. Record pitch location, type, result, and type of contact. Pitch sequences are organized into at-bats; track the pitchers and the batters throughout a game. Add new games and track players’ trends and matchups throughout a season. |